Get to Know Laser Beam a Bit Better Before They Return Again for Another Amazing Performance.

The iPhone 12 Pro's lidar sensor -- the black circle at the bottom right of the camera unit -- opens up AR possibilities and much more.

Patrick Holland/CNET

Apple is bullish on lidar, a technology that's in the iPhone 12 Pro and iPhone 13 Pro, and Apple's iPad Pro models since 2020. Look closely at the rear camera arrays on these devices and you'll see a little black dot near the camera lenses, about the same size as the flash. That's the lidar sensor, and it delivers a new type of depth-sensing that can make a difference in photos, AR, 3D scanning and possibly even more.

Read more: iPhone 12's lidar tech does more than improve photos. Check out this cool party trick

If Apple has its way, lidar is a term you'll keep hearing. It's already a factor in AR headsets and in cars. Do you need it? Maybe not. Let's break down what we know, what Apple is using it for, and where the technology could go next. And if you're curious what it does right now, I've spent hands-on time with the tech, too.

What does lidar mean?

Lidar stands for light detection and ranging, and has been around for a while. It uses lasers to ping off objects and return to the source of the laser, measuring distance by timing the travel, or flight, of the light pulse.
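
The timing idea above comes down to simple arithmetic: the pulse travels out and back, so the distance is the speed of light times the round-trip time, divided by two. Here is a minimal Python sketch of that calculation. The function name and the example timing are illustrative assumptions, not anything Apple has published about its sensor.

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in meters for a pulse's round-trip time.

    Hypothetical helper for illustration: the pulse covers the distance
    twice (out and back), so the travel time is halved.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# Assumed example: a pulse that returns after about 33.4 nanoseconds.
print(distance_from_round_trip(33.4e-9))
```

A round trip of roughly 33 nanoseconds works out to about 5 meters, which gives a sense of how precise the timing has to be at room scale.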

Trying some of the LiDAR-enabled AR apps I can find for the 2020 iPad Pro, to show meshing. Here's one called Primer, an early build to test wallpaper on walls pic.twitter.com/SatibguyTm

-- Scott Stein (@jetscott) April 14, 2020

How does lidar work to sense depth?

Lidar is a type of time-of-flight camera. Some other smartphones measure depth with a single light pulse, whereas a smartphone with this type of lidar tech sends waves of light pulses out in a spray of infrared dots and can measure each one with its sensor, creating a field of points that map out distances and can "mesh" the dimensions of a space and the objects in it. The light pulses are invisible to the human eye, but you could see them with a night vision camera.
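
To make the "field of points" idea above concrete, here is a rough Python sketch that turns a small grid of per-dot depth readings into 3D points using a standard pinhole camera model. Everything specific here is an assumption for illustration: the function name, the grid layout, and the camera parameters (`fx`, `fy`, `cx`, `cy`) are hypothetical, and this is not how Apple's own pipeline is documented to work.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


def depth_grid_to_points(
    depth_m: List[List[Optional[float]]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a grid of depth readings (meters) into 3D points.

    Assumes a pinhole camera: fx/fy are focal lengths in pixels and
    cx/cy is the principal point. Readings that are None or not
    positive are treated as "no return" and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 grid of readings with made-up camera parameters.
grid = [[2.0, None], [0.0, 4.0]]
print(depth_grid_to_points(grid, fx=2.0, fy=2.0, cx=0.0, cy=0.0))
```

A meshing step would then connect neighboring points into triangles. That part is left out here, since the article doesn't describe how it is done.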

Isn't this like Face ID on the iPhone?

It is, but with longer range. The idea's the same: Apple's Face ID-enabling TrueDepth camera also shoots out an array of infrared lasers, but can only work up to a few feet away. The rear lidar sensors on the iPad Pro and iPhone 12 Pro work at a range of up to 5 meters.

Lidar's already in a lot of other tech

Lidar is a tech that's sprouting up everywhere. It's used for self-driving cars, or assisted driving. It's used for robotics and drones. Augmented reality headsets like the HoloLens 2 have similar tech, mapping out room spaces before layering 3D virtual objects into them. There's even a VR headset with lidar. But it also has a pretty long history.

Microsoft's old depth-sensing Xbox accessory, the Kinect, was a camera that had infrared depth-scanning, too. In fact, PrimeSense, the company that helped make the Kinect tech, was acquired by Apple in 2013. Now, we have Apple's face-scanning TrueDepth and rear lidar camera sensors.

The iPhone 12 Pro and 13 Pro cameras work better with lidar

Time-of-flight cameras on smartphones tend to be used to improve focus accuracy and speed, and the iPhone 12 Pro did the same. Apple promises better low-light focus, up to six times faster in low-light conditions. The lidar depth-sensing is also used to improve night portrait mode effects. So far, it makes an impact: read our review of the iPhone 12 Pro Max for more. With the iPhone 13 Pro, it's a similar story: the lidar tech is the same, even if the camera technology is improved.

Better focus is a plus, and there's also a chance the iPhone 12 Pro could add more 3D photo data to images, too. Although that element hasn't been laid out yet, Apple's front-facing, depth-sensing TrueDepth camera has been used in a similar way with apps, and third-party developers could dive in and develop some wild ideas. It's already happening.

It also greatly enhances augmented reality

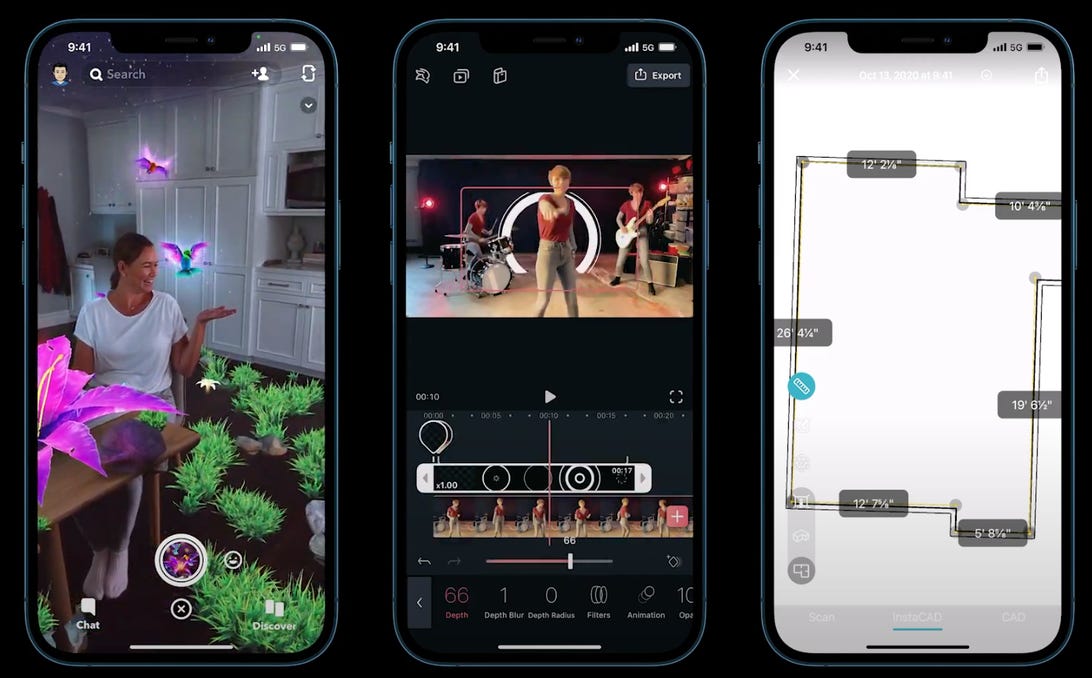

Lidar allows the iPhone and iPad Pros to start AR apps a lot more quickly, and build a fast map of a room to add more detail. A lot of Apple's core AR tech takes advantage of lidar to hide virtual objects behind real ones (called occlusion), and place virtual objects within more complicated room mappings, like on a table or chair.
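
Occlusion, as described above, can be thought of as a per-pixel depth comparison: a virtual pixel is drawn only if it is closer to the camera than the real surface the sensor measured at that spot. The Python sketch below shows that comparison on tiny grids. It illustrates the general idea only. The function names and the rule for missing readings are assumptions, not Apple's AR framework behavior.

```python
from typing import List, Optional

DepthGrid = List[List[Optional[float]]]


def virtual_pixel_visible(real_m: Optional[float], virtual_m: Optional[float]) -> bool:
    """Decide whether one virtual pixel should be drawn.

    Assumed rules for this sketch: no virtual content means nothing to
    draw; no real depth reading means nothing is known to be in front.
    """
    if virtual_m is None:
        return False
    if real_m is None:
        return True
    return virtual_m < real_m


def occlusion_mask(real_depth_m: DepthGrid, virtual_depth_m: DepthGrid) -> List[List[bool]]:
    """Return a grid of booleans: True where the virtual object is visible."""
    return [
        [virtual_pixel_visible(r, v) for r, v in zip(real_row, virtual_row)]
        for real_row, virtual_row in zip(real_depth_m, virtual_depth_m)
    ]


# Hypothetical scene: a real object 1 m away hides a virtual one at 2 m.
real = [[1.0, 3.0], [None, 2.0]]
virtual = [[2.0, 2.0], [2.0, None]]
print(occlusion_mask(real, virtual))
```

In the top-left pixel, the real surface at 1 meter sits in front of the virtual object at 2 meters, so that pixel of the virtual object is hidden.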

I've been testing it out on an Apple Arcade game, Hot Lava, which already uses lidar to scan a room and all its obstacles. I was able to place virtual objects on stairs, and have things hide behind real-life objects in the room. Expect a lot more AR apps that will start adding lidar support like this for richer experiences.

Snapchat's next wave of lenses will start adopting depth-sensing using the iPhone 12 Pro's lidar.

Snapchat

But there's extra potential beyond that, with a longer tail. Many companies are dreaming of headsets that will blend virtual objects and real ones: AR glasses, being worked on by Facebook, Qualcomm, Snapchat, Microsoft, Magic Leap and most likely Apple and others, will rely on having advanced 3D maps of the world to layer virtual objects onto.

Those 3D maps are being built now with special scanners and equipment, almost like the world-scanning version of those Google Maps cars. But there's a possibility that people's own devices could eventually help crowdsource that info, or add extra on-the-fly data. Again, AR headsets like Magic Leap and HoloLens already prescan your environment before layering things into it, and Apple's lidar-equipped AR tech works the same way. In that sense, the iPhone 12 and 13 Pro and iPad Pro are like AR headsets without the headset part... and could pave the way for Apple's first VR/AR headset, expected either this year or next. For an example of how this would work, look to the high-end Varjo XR-3 headset, which uses lidar for mixed reality.

A 3D room scan from Occipital's Canvas app, enabled by depth-sensing lidar on the iPad Pro. Expect the same for the iPhone 12 Pro, and maybe more.

Occipital

3D scanning could be the killer app

Lidar can be used to mesh out 3D objects and rooms and layer photo imagery on top, a technique called photogrammetry. That could be the next wave of capture tech for practical uses like home improvement, or even social media and journalism. The ability to capture 3D data and share that info with others could open up these lidar-equipped phones and tablets to be 3D-content capture tools. Lidar could also be used without the camera element to acquire measurements for objects and spaces.

I've already tried a few early lidar-enabled 3D scanning apps on the iPhone 12 Pro with mixed success (3D Scanner App, Lidar Scanner and Record3D), but they can be used to scan objects or map out rooms with surprising speed. The 16-foot effective range of lidar's scanning is enough to reach across most rooms in my house, but in bigger outdoor spaces it takes more moving around. Again, Apple's front-facing TrueDepth camera already does similar things at closer range. Over time, it'll be interesting to see if Apple ends up putting 3D scanning features into its own camera apps, putting the tech more front-and-center. For now, 3D scanning is getting better, but remains a more niche feature for most people.

Apple isn't the first to explore tech like this on a phone

Google had this same idea in mind when Project Tango -- an early AR platform that was only on two phones -- was created. The advanced camera array also had infrared sensors and could map out rooms, creating 3D scans and depth maps for AR and for measuring indoor spaces. Google's Tango-equipped phones were short-lived, replaced by computer vision algorithms that have done estimated depth sensing on cameras without needing the same hardware. This time, however, lidar is already finding its way into cars, AR headsets, robotics, and much more.

Source: https://www.cnet.com/tech/mobile/lidar-is-one-of-the-iphone-ipad-coolest-tricks-its-only-getting-better/
